Ever gone through the experience of staying up a couple of nights to resolve a crisis situation due to a problem that was 'known'? Doesn't feel good, does it? Yet we keep on making the same mistake. An open discussion on best practices in the area of IT systems management, quality, prevention of crisis, etc.

Tuesday, November 20, 2007

It's not done until it's deployed and working!

Scripting or otherwise automating the deployment of an application is an invaluable aid to the whole development process. It speeds the process, thereby reducing the code/test feedback cycle. Even more importantly, it makes the process repeatable. The same script used for deployment to test should be used for deployment to production, thereby exercising the deployment scripts as part of the test cycle.

Likewise, if your project has difficulty with deployments, having a developer present during production deployments will pay dividends. There is nothing like first hand experience for bringing home to the development team the issues faced when their application is used in anger.

Until you have confidence that your deployment will go perfectly every time, involve the development team in every production deployment. And make automated deployment a requirement of every development project.
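To make the "same script for test and production" point concrete, here is a minimal sketch. It only builds the list of deployment steps; the environment names, hosts, install directory and step wording are all hypothetical, and a real script would execute the steps rather than print them. The point is that the environment is the only input that varies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str
    host: str
    install_dir: str


# Hypothetical environments: only the configuration differs, never the steps.
ENVIRONMENTS = {
    "test": Environment("test", "test.example.internal", "/opt/app"),
    "production": Environment("production", "prod.example.internal", "/opt/app"),
}


def deployment_plan(env_name: str, version: str) -> list[str]:
    """Return the ordered deployment steps for one environment."""
    env = ENVIRONMENTS[env_name]
    package = f"app-{version}.tar.gz"
    return [
        f"copy {package} to {env.host}:{env.install_dir}",
        f"unpack {package} in {env.install_dir}",
        f"restart application on {env.host}",
        f"run smoke test against {env.host}",
    ]


if __name__ == "__main__":
    for step in deployment_plan("test", "1.2.0"):
        print(step)
```

Because test and production go through the same function, every test deployment is also a rehearsal of the production deployment.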

Sunday, November 11, 2007

wikipedia

I am often faced with situations I have absolutely no technical information or background on. Believe it or not, in many situations, all I have as starting tools are my instincts. However, this is rapidly corrected by good ol' Google searches and Wikipedia lookups, although I still miss speed-reading documentation in book form.

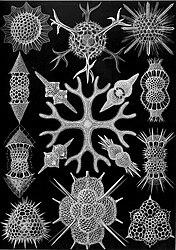

On vacation with nothing better to do than just relax, I came across these amazing pictures ..

http://en.wikipedia.org/wiki/Wikipedia:Featured_pictures

Friday, November 2, 2007

double your broadband speed

I have been struggling with my DSL service since I moved into this new home. For some reason, my modem was training to 4M instead of the 8M in my previous home. My first reaction was acceptance that this was due to the distance factor from the exchange (which IS a significant factor). However, after months of resentment that life should be better, I decided to do something about it.

I had suspected that my internal home wiring was a factor. I knew that I should get a boost by changing things around, however, I did not expect the level of impact. 15 minutes of investment boosted my speed from 4 Mbps to 8 Mbps.

So here is what I did and some explanation of what was causing the problem.

In the picture above, scenario A is your typical home internal wiring. The pair comes into the home through a special wall plate (NTE) and is then distributed around the home. This is typically a spiderweb. Worse, there may be other devices using the phone lines .. intercoms, alarm systems, pay-per-view box etc. The ADSL modem is typically on the end of one of these legs, so the signal to the modem suffers the interference and loss caused by the spiderweb of internal home wiring in addition to the normal loss and interference.

Scenario B uses a special device called an NTE5 central ADSL splitter that plugs into the wall plate where the copper pair from the exchange enters your home (you will have to do a mini external home survey to find where the pair disappears into the wall into your home). You can see from the diagram that the signal to the modem does not have any of the issues with the internal home wiring. This also eliminates the need to put splitters on each and every outlet that has a phone.

This is a 15 min job. Really trivial. The only limitation is that your ADSL modem now has to be located at and plugged into this wall plate only. Here is a good link / site that explains further: http://www.broadbandzone.co.uk/shop/centralisedfilter.html

The issue of having the ADSL modem locked into one place possibly far away from a computer is really a non-issue nowadays. Two options / reasons : most ADSL modems now have Wi-Fi built in (G or N standards will be more than enough). Alternatively, there are powerline ethernet devices that work very well. What these devices do is basically make your internal home power (220V) cables into a transmission network for ethernet. Fairly pricey still, however, extremely flexible and they work !! I use the NetGear Powerline Ethernet HD adapters and have no complaints.

Thursday, November 1, 2007

Maturing IT support - framework / model

I find the model I created useful in evaluating where teams stand in their maturity and the kinds of things I ask them to focus on to move up the value chain and improve their performance.

For example, to move from a state of 'managed' to 'measured', I ask teams to put in place measures in the following areas :

A> Business KPI reporting in the context of the system being measured.

B> Measures around the utilization of the system (beyond CPU etc.). The most basic is a graph of concurrent user logins at 15 min intervals. More sophisticated are transaction-level measures.

C> Systems availability reporting, which of course is always 99%+. A better way is measuring business impact i.e. #minutes downtime / call centre agent / month.
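The most basic utilization measure above, concurrent logins sampled every 15 minutes, can be sketched as below. This assumes you can extract (login time, logout time) pairs from your application or access logs; the function name and the session format are hypothetical.

```python
from datetime import datetime, timedelta


def concurrent_logins(sessions, start, end, step=timedelta(minutes=15)):
    """Count the sessions active at each sample time from start to end.

    sessions: iterable of (login_time, logout_time) datetime pairs.
    A session counts as active at time t if login_time <= t < logout_time.
    """
    sessions = list(sessions)
    samples = []
    t = start
    while t <= end:
        active = sum(1 for login, logout in sessions if login <= t < logout)
        samples.append((t, active))
        t += step
    return samples


if __name__ == "__main__":
    day = datetime(2007, 11, 1)
    sessions = [
        (day.replace(hour=9, minute=0), day.replace(hour=9, minute=40)),
        (day.replace(hour=9, minute=10), day.replace(hour=9, minute=20)),
        (day.replace(hour=9, minute=30), day.replace(hour=10, minute=0)),
    ]
    for when, count in concurrent_logins(
        sessions, day.replace(hour=9), day.replace(hour=10)
    ):
        print(when.strftime("%H:%M"), count)
```

Plot the resulting series per day and you have the graph; the same data kept over months becomes the historical view.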

I've fallen and I can't get up

When questioned about the technical details, same old pattern. Lack of understanding of underlying middleware, database, 3rd party tools etc.

When a team displays such a lack of understanding, what I hear is the team saying to me - "I can code it, however, I don't know how to actually make it work !"

Performance considerations are intrinsic to good development practices & design. While a focussed effort on performance optimization for a week using a highly skilled team always yields amazing results, it is a bad idea to deliver under that assumption.

This is often the simple difference between average and good teams.

Sunday, September 16, 2007

reuse

IMHO, I break reuse within IT into the following stages :

Stage 0 : Reuse teams (just this gets you 60% of the way)

Stage 1 : Reuse design patterns (typically effected by having a clearly articulated architecture and some governance frameworks). This however, may be a legacy of waterfall methodologies.

Stage 2 : Reuse software (libraries, SOA, components etc.)

Saturday, July 7, 2007

on monitoring enterprise shared/common components

First my rant, as this cost me 3 days of my life and a weekend away from my family. While quite a nifty tool and what appears to be a highly scalable platform, I was not prepared for the level of 'blindness' to simple things like throughput and response time. You can get all sorts of information about connections, threads etc. however, the information isn't sufficient within the package to fully monitor what is going in and out of this black-box. Also, no historical graphs in the monitor ? Wait, that is another product you have to buy from CA ? Wouldn't it be awesome if only we could reset the stats/counters at run-time ? That way, you could tune, then reset the stats/counters & re-measure.

OK. Got that off my chest and it wasn't completely venomous. Believe me, it's been a tough week.

Seriously, shared infrastructure typically has a really compelling business case and yes, there truly are efficiencies to be gained, however, effective monitoring becomes absolutely critical. All eggs in one basket means you save on baskets and runners, however, the stakes for a mistake go way up !! So you better be careful.

Shared infrastructure is also more complex to model and monitor, specifically when you are dealing with layered distributed systems. Eg., in the Siteminder model, there are agents that consume transactions (may be locally cached) from a series of policy servers which in turn consume transactions from downstream services, eg. LDAP directory servers, authentication servers ...

In such a framework, I would closely monitor the following aspects :

- daily/hourly transaction arrival rate from each agent (and the cache rate).

- transaction response time variance (this is a sign of downstream bottlenecks)

- resource consumption in the policy server (no. of threads active/in-use) .. monitor at peak

- above three for each of the downstream consumables.
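As a rough illustration of the first two measures in the list above, the sketch below groups transaction records by agent and hour and reports arrival count, cache rate and response time variance. The record layout (agent, hour, response seconds, cache hit) is an assumption made for the example; it is not something Siteminder or any other product emits in this form.

```python
from collections import defaultdict
from statistics import pvariance


def summarize(transactions):
    """Summarize transactions per (agent, hour).

    transactions: iterable of (agent, hour, response_seconds, cache_hit).
    Returns {(agent, hour): {"arrivals", "cache_rate", "response_variance"}}.
    """
    groups = defaultdict(list)
    for agent, hour, seconds, cache_hit in transactions:
        groups[(agent, hour)].append((seconds, cache_hit))

    summary = {}
    for key, rows in groups.items():
        times = [seconds for seconds, _ in rows]
        hits = sum(1 for _, cache_hit in rows if cache_hit)
        summary[key] = {
            "arrivals": len(rows),
            "cache_rate": hits / len(rows),
            # Population variance; a rising value hints at a downstream bottleneck.
            "response_variance": pvariance(times),
        }
    return summary


if __name__ == "__main__":
    sample = [
        ("agent-a", 9, 0.2, True),
        ("agent-a", 9, 0.4, False),
        ("agent-b", 9, 1.0, False),
    ]
    for key, stats in summarize(sample).items():
        print(key, stats)
```

The same function can be pointed at records for each downstream service to cover the last item in the list.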

If your application support team can do this, they have the first clue about what is going on within their framework else, guess what, tomorrow you may go through what I just went through this past week.

Wednesday, April 25, 2007

on the dark arts of search engine optimization

My quest is to get a hit in google search using a combination of my name and 'knownbugs' keywords. Should be unique with the top hit bringing me to the website hosted on google blogspot .. big assumption being the search engines give you results ordered by relevance (occurrence of all keywords).

Well, not quite so. So, reading up on recommendations, I first researched tagging. Technorati tags is an emerging 'power player' in the world of blogs. Supposedly, 'labels', 'titles', 'headers' in blog content/articles should be automatically picked up by the Technorati engine (invoked when you 'ping'). Alternatively, you can force a tag by using the 'Technorati Tags' method.

Even so, this only makes your tags visible within Technorati's blog search. For the normal google internet search, blog content hosted on blogspot appears invisible, however, on google's blog search, it works.

I also did the wait 30 days and magic will happen thing. This is what some recommend as the time it takes for spiders to crawl your content.

So, further tricks/tips. I am now in the process of getting a custom domain name. Godaddy.com offers cheap registrar services. My selection - www.knownbugs.org (or .info, .net, .biz .. unfortunately, .com was taken by someone who wants to make money by selling the name).

What a custom domain name will do, is treat the blog content as regular www content and hopefully allow the search engine 'spiders' to index the content making it visible in the regular google search world.

I suspect, I will find other gotchas as there clearly is money at play here.

Instead of us being in the age of 'content is king', we appear to be living in the age of 'content control is king'. Here is where there is a war going on. The behemoths - google, microsoft, yahoo .. all at play.

'Influencing' search engines is worth a lot of money nowadays. There is a massive amount of complexity behind the scenes, with 'SEO' or search engine optimization being a real growth area. I worry !

I worry about such central control on information access, however, hacks like us always have a way of breaking free.

More as my quest progresses !! Am still waiting for the DNS servers to update so expect to be redirected to www.knownbugs.org when you go to knownbugs.blogspot.com shortly.

Tuesday, April 24, 2007

common pitfalls in outage/crisis situations

1> Trial and error

Don't fall for this. You know you are in trouble when you get ambiguity from the technical teams. If you are stuck, ask yourself what you can do to get additional information onto the table. Often, you will find teams stuck 'enjoying' the problem because they have no method for infusing new information or experience into the problem diagnosis. Working in trial & error mode also forces you into a sequential analysis mode. Also, this leads to the '2 hr ETA bait' (see below).

2> Debugging in live

While prevention of recurrence is key and that requires some investment in analysis / data collection during an outage, it MUST be capped. Do not fall into the trap of ... "give me 20 more minutes, I have almost figured it out ..". You will hate yourself later. Walk into the situation with a time limit in your mind upon which you will trigger a failsafe way of restoring service. Communicate that upfront to the team (I am assuming that you have a failsafe procedure to restart the system to restore service that you will trigger on .. may be something as simple as rebooting the servers). Of course, best practice is that you always execute the failsafe and never debug in live, however, that requires a significant investment in test infrastructures that are capable of reproducing the problem. Remember, there is a value to getting to true root cause as that is the only way you will prevent recurrence.

3> Sequential analysis

Distinguish 'sequential analysis' from 'sequential execution'. Sequential execution is good, sequential analysis is BAD. On the analysis side, you should try to split off multiple teams (assuming you have the resources) on different aspects of the problem. That allows you to cover all bases quickly vs. an elongated recovery path where you are problem solving only one thing at a time. Sequential execution is GOOD because you want to introduce only one variable at a time else, you will break the cause and effect chain. Usually, problem solving is about eliminating variables and then incrementally fixing one thing at a time using a measured/scientific approach.

4> 2 hr ETA bait ...

Setting expectations on 'expected time to restoral' is really really hard. Here is the dark art of estimation at its finest. Setting no expectation is unacceptable (it will be fixed when it is fixed .. attitude). Your business partners/users will not be as upset about an outage as they will be about setting false expectations. Usually, a significant outage will require operational teams to build workaround/catch up plans where they may have to staff overtime or weekends. These plans depend on your estimates.

And .. what's worse is none of your technology suppliers / partners will co-operate.

On crisis situations with financial implications, vendors get very very conservative or worse, clam up ! In a crisis situation, you always will feel the information is inadequate to make a decision or set an expectation. I usually follow my instincts here (of course, harnessing whatever facts are available on the situation). Don't try this unless you have the right technical experience.

enterprise support from technology partners

Microsoft has really come a long way since then. I was very pleasantly surprised in a recent encounter by how they have matured. Their crisis technical lead was clear, crisp, unfazed by pressure and clearly knew what he was talking about. That instilled confidence. He knew how to distill and present the facts and avoid making false promises. Also, their follow-the-sun model actually worked !! The transitions were seamless with knowledge transfer occurring behind the scenes and a warm hand-off with 1 hr overlap. Their account team was on the ball and follow-up and follow-through was perfect. In fact, they chased me !!

Other examples of great support I have received are from BEA. BEA's account manager takes the unique honor of being the only sales guy I know who stuck with me for 36 hours straight during a crisis situation helping with anything he could (including doing the coffee rounds). I never believed a sales guy had that kind of stamina ;-). Oracle's down systems group are also top notch.

Technology partners usually have to support crisis situations remotely. They will depend on you for information and one of the challenges is to be able to supply it to them - real-time. Simple things like file-size limits in your email servers can look like bad ideas in these circumstances. Firewalls are a fact of life so, have a strategy on how your technology partners get access to your systems/intranet when you need them to.

crisis bridge protocol

a> one person speak at a time

b> identify the lead (hopefully you !)

c> people mute when not speaking

d> people not put you on hold (most PBXs will play music for the rest of the participants)

e> mute if you want to have a sidebar conversation

f> remember, if you go to sleep, you will be spotted because of your snoring

g> no calling from a cell phone (or c becomes very important)

h> establish clearly the participants and their role/what function they represent

Traditional conference bridges are slowly evolving into a multimedia facility - IM session in parallel is becoming commonplace with Netmeeting/Livemeeting quickly following. At Qwest, it was nice to have the facility to dial into an 800 number and then select a sub-bridge (option 1..9). That way, the main bridge team could quickly branch sub-teams off without confusion and avoid wasting time on communicating bridge numbers.

Separate out from the start a management bridge, customer bridge and the technical bridge. Chaos ensues if you mix them all into one.

Just my two minutes of brain dump .. will add more as I flesh this out/collect my thoughts.

Systems monitoring - historical views - best practice

There are several tools out there in the marketplace that do this. IBM Tivoli, HP Openview, BMC, EMC SMARTS (and then some), all offer solutions along these lines. The key is to instrument agents / data collectors across the estate (on each server) and have a central database & reporting web-site that allows IT folks to select a node and display historical results around a wide variety of technical aspects. The value seems ambiguous, however, let me tell you that it makes my life much much easier.

When in crisis mode, this data helps immensely. It is crucial data that tells you when something changed. It allows technical teams to quickly focus, analyze and resolve a set of issues that normally are thorny and contribute to large MTTR numbers on problem incidents. Yes, logging into the box and monitoring real time tells you there is a problem, however, a historical view tells you when something changed. Also, this is crucial for another best practice - server capacity management and monitoring.

The key is universal rollout / standardization. Don't get trapped in the technology selection mode. Pick one and implement universally. This isn't difficult work, however, best implemented within your server provisioning process so that anything new automatically has the standardized framework.

It is amazing how telling something as simple as a historical CPU profile is. You see processing/business utilization patterns, exactly when backups occur, batch jobs etc. and more importantly, when something CHANGED.
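To show how little is needed to spot "when something changed" once the history exists, here is a minimal sketch that scans a series of CPU utilization samples and reports the first one that departs from the average of the preceding few. The window size and threshold are arbitrary assumptions for illustration; the commercial tools mentioned above do this far more thoroughly.

```python
from statistics import mean


def first_change(samples, window=4, threshold=20.0):
    """Return the index of the first sample that differs from the mean of
    the preceding `window` samples by more than `threshold`, or None."""
    for i in range(window, len(samples)):
        baseline = mean(samples[i - window:i])
        if abs(samples[i] - baseline) > threshold:
            return i
    return None


if __name__ == "__main__":
    # Hypothetical hourly CPU utilization (%) with a step change part way through.
    cpu = [30, 32, 31, 29, 30, 31, 70, 72, 71]
    print("change detected at sample", first_change(cpu))
```

With timestamps attached to the samples, the returned index tells you which change ticket or batch job to go and look at.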

Tuesday, March 6, 2007

database maintenance

One recurring theme I see with junior DBAs is the lack of understanding of the 'analyze table' procedure. This is crucial for proper database performance. It can be scheduled once a week or more frequently based on system profile.

Basically, the 'analyze table' command gathers statistics on the table and stores them internally as hints for the query optimizer. Indexes are picked up based on these statistics. For dynamic tables (large growth or shrinkage or changes to key indexed fields), it is imperative this is performed frequently. For safety, run it anyway after key database operations.
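The idea can be tried out without a big database server. The sketch below uses SQLite as a stand-in (an assumption for illustration; the post does not say which database it has in mind, and the exact command and options differ by product). SQLite's `ANALYZE` gathers statistics on tables and indexes and stores them in the `sqlite_stat1` table, which its query planner consults. The table and index names are made up.

```python
import sqlite3


def gather_statistics():
    """Create a small skewed table, run ANALYZE, and return the stored statistics."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
    conn.execute("CREATE INDEX idx_orders_status ON orders (status)")
    # Skewed data: roughly 1% of rows are 'open', the rest 'closed'.
    conn.executemany(
        "INSERT INTO orders (status) VALUES (?)",
        [("open",) if i % 100 == 0 else ("closed",) for i in range(1000)],
    )
    conn.commit()
    # Gather optimizer statistics for all tables and indexes.
    conn.execute("ANALYZE")
    rows = conn.execute("SELECT tbl, idx, stat FROM sqlite_stat1").fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for tbl, idx, stat in gather_statistics():
        print(tbl, idx, stat)
```

On a table that grows or shrinks quickly these stored numbers go stale, which is why the command has to be re-run on a schedule.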

Know what 'good' looks like for your system. Baseline and save (better still commit to memory) the key behavioral aspects of your system eg. cpu utilization profile, throughput, response times, i/o levels etc. That way, you will know when this profile changes and will be a key trigger for you to action for early detection of problems. More importantly, when you implement change, you should compare the before and after profile of your system.

Milan Gupta

Saturday, March 3, 2007

Change management

I did not put change as the top problem within my post on what keeps me busy because I believe that change is good. It is necessary and as inevitable as evolution. It is necessary for healthy growth. So IT cannot take the simplistic approach of 'if it ain't broke, don't fix it!'.

Any decent sized telco typically has an IT estate of 3000+ systems, 50K+ computing assets and 10K+ people all changing stuff. Is it any surprise that systems availability & stability actually goes up during Christmas ?

What is key to establishing a high performance IT organization is managing change effectively. It is instrumental to have a proper inventory of systems, more importantly, their inter-dependencies and impact on business process. Most importantly, a team that understands this model.

While end-to-end testing frameworks can flesh out unexpected side-effects of change, it isn't reasonable to expect that all work will go through this framework. With 10K+ employees, there will be leakage and impact. Specifically around stuff that you would least suspect.

A proper and effective change management framework would consist of the following :

- A team as described above that performs the function of a change approval board (centralized or decentralized)

- An e2e testing framework that certifies each change

- An effective communication framework typically a change ticket process that notifies the relevant enterprise pieces that are potentially impacted by the change

- Leadership & support from the development teams on implementing change.

- Post-implementation verification of change (ideally monitoring key business KPIs before and after the change).
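The last item, post-implementation verification, can be as simple as comparing a handful of KPIs captured before and after the change. Here is a minimal sketch; the KPI names and the 10% tolerance are hypothetical and would need to be chosen per system.

```python
def kpi_regressions(before, after, tolerance=0.10):
    """Return the KPIs whose value moved by more than `tolerance`
    (as a fraction of the 'before' value), mapped to the relative change."""
    flagged = {}
    for name, old in before.items():
        new = after.get(name)
        if new is None or old == 0:
            continue  # nothing to compare against
        change = (new - old) / old
        if abs(change) > tolerance:
            flagged[name] = change
    return flagged


if __name__ == "__main__":
    before = {"orders_per_hour": 200, "avg_response_s": 2.0}
    after = {"orders_per_hour": 150, "avg_response_s": 2.1}
    for name, change in kpi_regressions(before, after).items():
        print(f"{name}: {change:+.0%}")
```

Anything flagged goes back to the change approval board with the change ticket attached.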

proactive vs. reactive application support

The usual breakdown I observe is in #2 with companies mostly operating in #3. #1 requires a sophisticated business process monitoring infrastructure, something I consider to be still an industry wide problem given the state of investment and commitment to such projects within an IT portfolio. Breakdown in #2 is usually an artifact of organizational boundaries and/or poor skillsets & focus. Each team operates in a silo and purely on a reactive basis. A truly dangerous place to be for any CIO.

Thursday, March 1, 2007

synchronous vs asynchronous transactions

Remember - synchronous transactions for time-sensitive stuff. For transactions that a call center rep has to wait on (while customer is on the phone) .. use synchronous backplane eg. web-services. For others (non-time sensitive), use asynchronous. Your messaging architecture MUST support both.

The thing that creates havoc the most in call centers is transaction performance variance. Not always just transaction performance. If something consistently takes 90 seconds, you will find your call center reps work around this poor performance by predicting this period of wait and filling it with other work or small talk with the customer. What makes call center agents mad is transaction variance - sometimes it only takes 4 secs, sometimes 300. That's when the customer on the line gets the embarrassed comments - 'my system is slow .. my system has frozen up ..' etc. etc.
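The difference between "slow" and "erratic" shows up clearly once you look at spread rather than average. The sketch below, using made-up response times, compares a consistently slow transaction with an erratic one that has exactly the same mean.

```python
from statistics import mean, pstdev


def spread(response_times):
    """Summarize response times (seconds) by mean, standard deviation and range."""
    return {
        "mean": mean(response_times),
        "stdev": pstdev(response_times),
        "min": min(response_times),
        "max": max(response_times),
    }


if __name__ == "__main__":
    consistent = [90, 91, 89, 90]  # always slow, but predictable
    erratic = [4, 300, 6, 50]      # same average, wildly unpredictable
    print("consistent:", spread(consistent))
    print("erratic:   ", spread(erratic))
```

A report that only shows the average would rate these two transactions as identical, which is exactly why variance belongs on the dashboard.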

systems availability vs. systems effectiveness

Providing excellent day-to-day service ! What is the role of IT in this ? What is the role of a particular application support team ?

A typical CIO challenge is to take the IT group up the value chain within a company. This applies to all disciplines including providing day-to-day support.

Support teams and IT value is typically stuck at the systems availability monitoring and reporting level. 99.95% uptime. Famous words. We've all heard this. Somehow that 0.05% seems to hide a massive amount of operational impact. Putting that under the microscope usually leads to startling revelations.
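A quick bit of arithmetic shows what that 0.05% can hide. The sketch below converts an availability percentage into minutes of downtime per 30-day month, then multiplies by the number of affected call centre agents. The figure of 500 agents is a hypothetical, and the calculation assumes, pessimistically, that all the downtime lands while every agent is working.

```python
def downtime_minutes(availability_pct, days=30):
    """Minutes of downtime implied by an availability percentage over `days`."""
    total_minutes = days * 24 * 60
    return total_minutes * (100.0 - availability_pct) / 100.0


def agent_minutes_lost(availability_pct, agents, days=30):
    """Downtime multiplied by the number of agents who cannot work during it."""
    return downtime_minutes(availability_pct, days) * agents


if __name__ == "__main__":
    minutes = downtime_minutes(99.95)
    lost = agent_minutes_lost(99.95, agents=500)
    print(f"downtime per month: {minutes:.1f} minutes")
    print(f"agent time lost:    {lost / 60:.0f} hours")
```

At 99.95% that is roughly 21.6 minutes a month, which for 500 agents comes to about 180 agent-hours of lost work.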

An alternate strategy is to focus on systems effectiveness - my name for nothing other than business process monitoring, however, this is a little different. Here, you apply the concepts of business process monitoring in a 'systemy' way.

To clarify, each system typically performs a specific function within a process chain. Systems monitoring at the technical level covers all the engineering aspects of the platform eg.

Database, hardware, CPU, I/O, Filesystem space etc. Usually, this stuff is trapped using tools like HP Openview, BMC etc. and monitored by a 7x24 bridge operation. When alerts are received, automatic callouts are performed with an extra pair of eyes to make sure.

Better groups take this up one level. Monitoring of log files for errors eg. SQL errors, core dumps, etc. However, this is also usually insufficient. Even better groups start getting sophisticated around application level capacity monitoring - eg. thread utilization, queuing behavior and other subtleties around bad JVM characteristics eg. full GCs.

However, that also isn't usually sufficient. The trick is to customize a set of measures that are relevant to the business use of the application and monitor for that. Keep your finger on that pulse and magic happens. Your operational partners will no longer care if the system goes up or down .. they are happy for you to measure based on the business performance. An example of this is to measure performance response time and variance for transactions that are time sensitive - eg. those that a call center application calls on the back-office systems. Alternately, in the case of workflow, some measure of cycle time and right first time (on-time being trivial case of RFT).

Do this and suddenly, you have gone up the value chain and made your life simpler. Your teams grow as they go from being purely reactive to being proactive. Also, more importantly, they learn the operational side of things and recognize exactly the criticality and value of their system in the larger picture.

This isn't anything fancy. I'm not talking about a full-scale business process monitoring framework here. Full BPM requires a standardization of metrics and process and usually abstracts away from the systems design and implementation. For architectures that are a mix of legacy and new, this is usually never perfect either. I'm talking about a simple application of common sense to what you monitor. The challenge is usually understanding the design of the system and extrapolating the meaningful set of measures that the end-to-end business process depends on. This is usually very specific to the design and implementation of the system as the data must be harvested frequently and usually in real-time.

Wednesday, February 14, 2007

Project Execution

1> The right leadership.

Any project must have its technical leadership and its business leadership straight. Yes, this boils down to two people who will challenge each other and maintain the necessary checks and balances.

2> Management & Escalation.

One of the biggest blunders, and a reliable source of chaos, is having non-technical management manage technical work. It is a recipe for disaster, as you will spend your life on escalations that look complex and scary but are very simply solved. Also, a lack of understanding of the development cycle usually leads to premature questions from management, which in turn lead to premature decision making, needless work etc. Eg. on one of my projects, being the architect / technical lead, I was asked (by the VP) for a technical specification of the system within the first month of what was a 2 yr project !!

Understand that managers want to know when things will get done even before they allow anything to start. Developers will not reliably tell you when things will get done until they are actually done. Such is life and the variability of agile. You are welcome to use waterfall if you want 100% schedule predictability, however, understand that you are basically getting 1 unit of work at a cost of 5 (the additional 4 are padding to manage the risks/unknowns which are inherent in most projects).

On structure, you have basically two philosophies: architect / tech lead reports to the manager, or manager reports to the architect / tech lead. I vote for the latter. As long as the manager / project manager understands their role wrt the tech lead / architect, things typically are fine. Watch this dynamic very very carefully as this is where bad bad decisions are usually made. You do NOT want a non-technical person making a technical decision.

3> Top talent recruiting - the best attract the best. No one likes carrying dead weight. Once you seed the team correctly, this will be self-correcting. I have never believed, and never will believe, in the 200+ project team size. I have done amazing things with a 40 person development team. Remember, the software design and tools you choose themselves bring limitations on how many developers can work concurrently and productively.

4> Pay attention to the learning curve. Things will not progress at the pace you would expect until you have a seed development team that has matured sufficiently around their understanding of the business problem. These will become your technical leads as your project grows. It is wise to invest this learning in the best technical developers from the start as they are the ones who are going to produce the software.

5> Match your technology choices to your developer team skills. If you want your project to be the guinea pig for the 'next cool new tool / technology' .. OK .. but understand your risks. You need the time to get your developers up to speed on this.

6> Establish the right roles within the team from the start.

Architect/Designer, Developer/Tech Lead, Business SME/Tester, Project Manager, Test/Development Environment Manager, Application support lead, Deployment Lead, Integration Lead (Designer), Business Implementation Lead (Training/Comms/Metrics)

7> Get and stay close to the end-user/customer.

The shorter the communication chain between an end user and a developer, the greater the chance of success.

8> Test from the start - your user stories are really test cases in disguise. Pay attention to test data. Test Director is a decent tool to document your tests and track your coverage.

9> Solve the hard problems first. Focus on the unknowns as early as possible. PANIC EARLY !!

It's only great teams that have a 40 hr work week in their final week before deployment. No magic here .. it comes from spending the weekends before that so that you are coasting in style when you near the finish line.

10> Develop/Test with real data as early as possible.

At the early phases of a project, the developers must have flexibility over the testers. This is the best-effort co-operative testing phase. It is extremely frustrating for the testers as the productivity is low. If you are using end-users, you must have alignment, else, you will be fire-drilling all the time trying to manage perceptions of problems from above. This phase of testing is key as your end goal is to get as much early feedback to the developers. During the last phase of the project, the testers must be the enemy of the developers as they move to the 'antagonistic' testing and the user acceptance testing phase. Here, the testers must be the focus with full support from the developers and the test environment leads. Another best practice is to have a repeatable set of test cases rather than a set of testers. This provides a very easy way to manage stakeholders as anybody who wants a say, can review and add to the test cases. The better they are, the better your chances for a quality delivery.

11> Co-locate as much as possible.

NB> Do not assume co-location means same building or floor. Even team members strewn across the floor randomly will not have the same effectiveness as an integration pod or 6 adjacent cubicles housing a sub-team working on a common area. There is truly an amazing effect on productivity. Make the hard call and force this if you are getting into the red zone. I understand the day and age of offshoring, however, in crunch mode, you cannot replace good old co-location with anything. Remember, communication/organizational barriers are the most common cause of integration problems. The main problems will be at the boundaries.

12> Basic software engineering disciplines - makefiles, daily/continuous builds, regression test automation, code reviews, use of software quality and analysis tools (purify, jprobe, ..).

13> If you are using 3rd party tools, understand your risks. There will be issues and it is up to you to design around them. Remember, you will have limited flexibility to fix/modify the 3rd party tool. A third party tool does not relieve you from the need to understand the details.

14> Certain roles go hand in hand with accountabilities and later roles in the project lifecycle, e.g. it is ideal to have the people who specified the user requirements also be the testers, and to have the solution designers/architects closely involved in the integration / testing / defect resolution of the project.

15> Plan for production - clean up the logfiles so they are meaningful and concise. Write the business process monitoring reports that allow you to verify the platform's effectiveness post production. Do this early, as it will allow you to identify gaps in the design / functionality that can make your life extremely difficult post production. An easy example: for a workflow based system, have the ability to take a snapshot of the in-flight jobs and where they are. Have a clear model of the expected execution profile so you can catch exceptions, performance issues, bottlenecks, etc.
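As a rough illustration of the in-flight snapshot idea, here is a minimal Python sketch. Everything in it is an assumption for the sake of the example: the `Job` record, the stage names and the per-stage time limits are hypothetical, and a real system would pull this from its own workflow tables.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Job:
    job_id: str
    stage: str          # hypothetical workflow stage name
    age_minutes: float  # time spent so far in the current stage


def snapshot(jobs, expected_max_minutes):
    """Count in-flight jobs per stage and list jobs older than expected."""
    per_stage = Counter(job.stage for job in jobs)
    stuck = [
        job.job_id
        for job in jobs
        # Stages with no expected limit are never flagged.
        if job.age_minutes > expected_max_minutes.get(job.stage, float("inf"))
    ]
    return per_stage, stuck


# Made-up sample data: three jobs, one of them well past its expected time.
jobs = [
    Job("A1", "validate", 2.0),
    Job("A2", "provision", 95.0),
    Job("A3", "provision", 10.0),
]
per_stage, stuck = snapshot(jobs, {"validate": 5.0, "provision": 60.0})
print(dict(per_stage), stuck)
```

The per-stage limits play the role of the 'expected execution profile': anything that exceeds them is an exception worth looking at.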

16> Measure before you implement, measure after you implement .. you are not done until you restabilize the business KPIs (and this will take you a month). Your end-users may not notice things as they are new to the system too .. so, don't expect to get the usual level of guidance from operations on 'problem areas / defects'.

Sunday, February 11, 2007

People

We have all gone through the IT top talent recruiting paradigm. There is, however, another angle to the people aspect. What makes better people ?

I have seen organizations that are so highly structured and specialized in their jobs that they start showing two evils : 1> they are no longer downward scalable 2> they lose the ability to solve problems efficiently (this shows up of course in my job area).

Here is why.

Imagine the role of a requirements analyst, architect, solution designer, component designer, developer, tester, configuration manager, application support, deployment manager ... the list goes on. Now overlay the technical development aspect with people who are specialized dbas, network designers, hardware / infrastructure designers, bea specialists, mq specialists, iis server/windows/asp specialists, c/c++/UNIX specialists, java/jvm specialists etc. You can start seeing the problem.

Problems usually go through a period of analysis (or finger pointing). This is where having skill sets that span the technology spectrum is important. The difference in time to resolution is night and day between having a single person who knows BEA / Oracle / UNIX / Networking concepts and having someone who is only a trained BEA person without a thorough knowledge of UNIX kernel parameters or networking concepts. In any large IT organization, covering that same ground will typically take a 4 or 5 person team.

I grew up in an environment where we executed projects e2e. We conceptualized, we presented, we sold, we interviewed, we architected, we designed, we developed, we tested, we deployed, we installed servers, we supported, we wore the pagers. On the technology side, we made the choices and had to live with them - no excuses. This gave us a very unique perspective.

There don't appear to be many people like me. The newer technologies are allowing people a level of abstraction that is a death sentence to any development that isn't an absolute no-brainer. I see this in the resume stream, even for my top talent industry search.

So, is this what companies are breeding ? I don't see the flexibilities to grow across dimensions anymore .. where else are the kids going to get their experience from ?

This is one that does have a fix.

Friday, February 2, 2007

Saturday, January 27, 2007

Default Configuration

This little bugger has already caused me pain twice. For applications consuming web services, this parameter is crucial. Of course, there is much more complexity to IIS server tuning. A good article on this is here. Specifically read the section on threading. This is just a tickler.

MaxConnections controls the number of simultaneous allowable open connections. So, if you have an app consuming web services running more than 2 parallel threads, you have a choke point.
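To see why the cap becomes a choke point, here is a back-of-the-envelope model in Python. It is a sketch under simplifying assumptions, not a measurement: every call is assumed to take the same time, all calls are issued at once, and the only thing a call waits for is a free connection. The function name and the numbers are made up for illustration.

```python
import math


def batch_time(parallel_calls, max_connections, seconds_per_call):
    """Wall-clock time for calls issued at once through a capped connection pool.

    Assumes every call takes the same time and waits only for a free connection,
    so the calls complete in 'waves' of at most max_connections each.
    """
    waves = math.ceil(parallel_calls / max_connections)
    return waves * seconds_per_call


# 40 worker threads, each making one half-second web service call.
capped = batch_time(parallel_calls=40, max_connections=2, seconds_per_call=0.5)
tuned = batch_time(parallel_calls=40, max_connections=40, seconds_per_call=0.5)
print(capped, tuned)
```

With a cap of 2, the 40 calls queue up in 20 waves; with a cap of 40, they all go out together. The threads are there, but they spend their time waiting rather than working.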

In my world, I was seeing high variances in transaction performance at a particular server. This server was in turn consuming web services from a downstream system. The box was not CPU or I/O bound, however, it had the typical CPU profile of a badly scaled application (flattish and capped at 40% vs. highly spiky 0-100%). Tweaking this from a default of 2 to 40 changed the transaction profile from an 80th percentile bench of 40 secs, 90th %ile of 150 secs, 99th %ile of 300 secs (timeout) to a 99th %ile of 5 secs.

Wow !!! Imagine the surprise the next day for the users ..
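For anyone wanting to build the same kind of percentile profile from their own transaction timings, a minimal Python sketch follows. It uses the nearest-rank definition of a percentile, which is one of several in common use, and the latency samples are invented for the example (they are not the measurements from the server described here).

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Invented transaction times in seconds; the long tail is what to watch.
latencies = [1, 2, 2, 3, 3, 4, 5, 40, 150, 300]
profile = {p: percentile(latencies, p) for p in (80, 90, 99)}
print(profile)
```

Taking the same profile before and after a configuration change makes the effect on the tail visible in a way an average never will.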

Too many people make the mistake of not tuning the Windows box before production. I wonder why Windows server is configured by default (from a scalability perspective in this regard) the same as my laptop running Windows XP ?

In fact, this fundamentally also applies to an end user PC. Increasing this opens up the pipeline for a web browser. Largest impact is for loading web pages that have plenty of little sub-pages etc. each of which can be loaded concurrently. Web admins hate this because it causes 'bursty' traffic conditions on the back end web servers.

This whole issue relates to the need to pay attention to 'default' configuration for infrastructure deployed. By default, things are not 'tuned' and this applies to nearly all products. In fact, some are 'mis-tuned' making it mandatory to 'tweak'.

Windows OS, IIS server, SQL server, UNIX kernel, BEA and Oracle are some of the standard culprits, with varying degrees of guilt. For some, this is more an art than a science. Things are so application dependent that template configurations are impossible.

Pain points

1> Performance

Probably the greatest area of weakness in the IT discipline which appears in direct conflict with our need / necessity to meet timelines. The art of performance testing an application requires the greatest skill level .. a thorough understanding of not only the software design but also of the business use of that software. A comprehensive black box test and simulating the real world is usually an impossible challenge for complex high transaction systems. So what constitutes a barely sufficient approach ? Does it boil down to having the right person do the job vs. a set formula ?

2> Software quality / engineering issues

Bugs Bugs Bugs !! When will we ever figure out the discipline of paying attention to detail and fully understanding the subtle behavioral side effects of the software we write ? Cost, Quality & Speed seem to conflict, however, that isn't really so. Agile is a step towards really representing what developers feel, however, as usual, it is more a management buzzword than reality. More books on what it means, written by people who have never written a line of code. This symptom, however, represents something more fundamental. It is about engineering discipline .. instilling a sense of pride within teams about what we produce, all the way down to the individual contributing developer.

3> Solutions where complexity has overtaken team skills

This is a good one .. with lower cost sourcing strategies, we are not always sticking with the highest calibre talent. We are seduced too often by the promise of technology eliminating the critical dependence on the developer. An example: buying BEA doesn't mean you are relieved of the duty of understanding middleware concepts and, more importantly, the proper use of BEA.